Blind Image Restoration Based on Cycle-Consistent Network

Shixiang Wu, Chao Dong, Yu Qiao

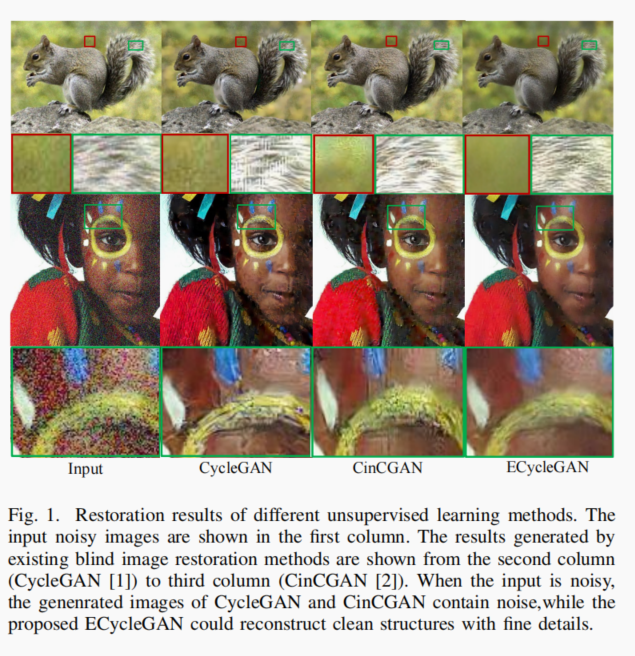

This paper studies blind image restoration, where the ground truth is unavailable and the downsampling process is unknown. This setting makes both supervised learning and accurate kernel estimation impossible. Inspired by the recent success of image-to-image translation, we adopt an unsupervised framework based on cycle consistency to tackle this challenging problem. Unlike image-to-image translation, image restoration requires high fidelity in the reconstructed image. Therefore, to improve the reconstruction ability of the cycle-consistent network, we explore three aspects. First, we constrain the low-frequency content of the data so that the output preserves the content of the low-resolution (LR) input. Second, we impose a constraint on the content of the training data to provide better supervision for the discriminator, which helps suppress high-frequency artifacts and fake textures. Third, we average model parameters to further improve the quality of the generated images and to ease model selection for GAN-based methods. Since different GAN-based models tend to produce different artifacts, model averaging allows a smoother trade-off between artifacts and fidelity. We conducted extensive experiments on real-noise and super-resolution datasets to validate the effectiveness of these techniques. The proposed ECycleGAN also outperforms state-of-the-art (SOTA) methods in two applications: blind super-resolution (SR) and blind denoising.
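The abstract does not say how the low-frequency constraint or the parameter averaging is implemented, so the following is only a minimal sketch of two common ways to realize those ideas, not the authors' method. It assumes (1) that the low-frequency constraint compares the SR output, downsampled by s x s average pooling (a crude low-pass filter), with the LR input under an L1 loss, and (2) that model averaging is a weighted element-wise mean of parameters from several checkpoints. All function names (`avg_pool`, `low_freq_loss`, `average_params`) are hypothetical, and plain Python lists stand in for image and parameter tensors so the example is self-contained.

```python
from typing import Dict, List, Optional, Sequence

# Assumption: a grayscale image is a list of rows of pixel values.
Image = List[List[float]]
# Assumption: each parameter tensor is flattened into a list, keyed by its name.
Params = Dict[str, List[float]]


def avg_pool(img: Image, s: int) -> Image:
    """Downsample by averaging non-overlapping s x s blocks (a crude low-pass filter).

    Trailing rows/columns that do not fill a full block are dropped.
    """
    h, w = len(img) // s, len(img[0]) // s
    return [
        [
            sum(img[i * s + di][j * s + dj] for di in range(s) for dj in range(s)) / (s * s)
            for j in range(w)
        ]
        for i in range(h)
    ]


def low_freq_loss(sr: Image, lr: Image, s: int) -> float:
    """Hypothetical low-frequency constraint: mean L1 distance between the
    average-pooled SR output and the LR input (both must end up the same size)."""
    pooled = avg_pool(sr, s)
    n = len(lr) * len(lr[0])
    total = sum(abs(p - q) for prow, qrow in zip(pooled, lr) for p, q in zip(prow, qrow))
    return total / n


def average_params(
    checkpoints: Sequence[Params], weights: Optional[Sequence[float]] = None
) -> Params:
    """Weighted element-wise average of parameters from several checkpoints.

    With weights=None every checkpoint gets equal weight; weights are
    normalized by their sum, so they need not add up to 1.
    """
    if weights is None:
        weights = [1.0 / len(checkpoints)] * len(checkpoints)
    total = sum(weights)
    out: Params = {}
    for name in checkpoints[0]:
        size = len(checkpoints[0][name])
        out[name] = [
            sum(w * ckpt[name][k] for w, ckpt in zip(weights, checkpoints)) / total
            for k in range(size)
        ]
    return out


if __name__ == "__main__":
    sr = [[1, 3], [5, 7]]
    print(low_freq_loss(sr, [[4.0]], 2))  # pooled SR is [[4.0]], so the loss is 0.0
    a, b = {"w": [0.0, 2.0]}, {"w": [2.0, 4.0]}
    print(average_params([a, b]))  # uniform average: {'w': [1.0, 3.0]}
```

Passing two checkpoints with weights `[1 - alpha, alpha]` interpolates between them as `alpha` moves from 0 to 1, which is one simple way to get the kind of gradual control over the artifact/fidelity balance that the abstract describes. In a real training setup, the same logic would run over framework tensors rather than Python lists.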