Activating More Pixels in Image Super-Resolution Transformer

Xiangyu Chen, Xintao Wang, Jiantao Zhou, Yu Qiao, Chao Dong

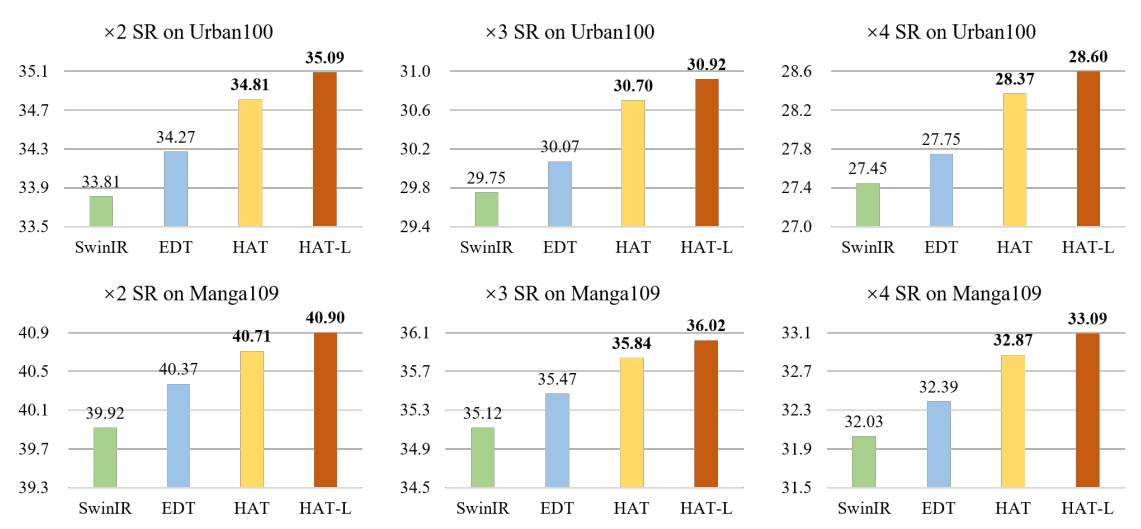

Transformer-based methods have shown impressive performance in low-level vision tasks such as image super-resolution. However, through attribution analysis, we find that these networks can only utilize a limited spatial range of input information. This implies that the potential of the Transformer is still not fully exploited in existing networks. To activate more input pixels for reconstruction, we propose a novel Hybrid Attention Transformer (HAT). It combines channel attention and self-attention schemes, making use of their complementary advantages. Moreover, to better aggregate cross-window information, we introduce an overlapping cross-attention module that enhances the interaction between neighboring window features. In the training stage, we additionally propose a same-task pre-training strategy to bring further improvement. Extensive experiments show the effectiveness of the proposed modules, and the overall method significantly outperforms state-of-the-art methods by more than 1 dB.
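To illustrate why overlapping windows can widen the range of pixels a window interacts with, the following is a minimal one-dimensional sketch of the window geometry only. It is not the HAT implementation: the function names, the `overlap` parameter, and the sizes used are all hypothetical, and the sketch assumes that keys and values are drawn from a query window enlarged by a fixed margin on each side.

```python
def kv_span(window_index, window_size, overlap, length):
    """Return the [start, stop) range of positions a query window may attend to.

    Assumption for illustration: the query window covers `window_size`
    positions, and keys/values come from the same window enlarged by
    `overlap` positions on each side, clipped to the signal borders.
    overlap=0 corresponds to plain non-overlapping window attention.
    """
    start = window_index * window_size
    stop = start + window_size
    return max(0, start - overlap), min(length, stop + overlap)


def windows_reached(window_index, window_size, overlap, length):
    """List the indices of all windows that the key/value span touches."""
    lo, hi = kv_span(window_index, window_size, overlap, length)
    first = lo // window_size
    last = (hi - 1) // window_size
    return list(range(first, last + 1))


if __name__ == "__main__":
    # Hypothetical sizes: a 32-position signal split into windows of 8.
    for overlap in (0, 4):
        span = kv_span(1, 8, overlap, 32)
        reached = windows_reached(1, 8, overlap, 32)
        print(f"overlap={overlap}: span={span}, windows reached={reached}")
```

With no overlap, the second window sees only its own positions; with a margin of 4 its key/value span also touches both neighboring windows, which is the kind of direct cross-window interaction the overlapping cross-attention module is meant to provide.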